In the previous post you saw how to filter and remove data from a database with the SQLModel API. In this post you’ll see how to export the Event data to a comma separated value (CSV) file. And you’ll see how to complete the trip by importing CSV data into the database.

Exporting a CSV

Let’s start off with exporting the Events to a CSV file. Then we can use the import command as a sanity by importing the exported CSV.

Create a new command in a function called export_events. Adding the command decorator will add an export-events command to the CLI.

@app.command()

def export_events():

passThe export-events command will have a single required argument, the name of the file to store the exported CSV.

@app.command()

def export_events(

filename: str

):

passAnd the export-events command will also have an option. When True, the header option will include a header row in the exported CSV. And it will be True by default.

@app.command()

def export_events(

filename: str,

header: Annotated[bool, typer.Option()] = False

):

passYou can also include help text for the option with the help keyword argument. In addition, annotating the filename parameter with typer.Argument lets you add help to the argument. And adding a docstring lets you include help for the function.

@app.command()

def export_events(

filename: Annotated[str, typer.Argument(

help="File to store the CSV"

)],

header: Annotated[bool, typer.Option(

help="Include headers in the first row of the CSV"

)] = False

):

"""

Export the Events to a command separated value (CSV) file

"""

passNow take a look at the help for the export-events command

$ python main.py export-events --help

Usage: main.py export-events [OPTIONS] FILENAME

Export the Events to a command separated value (CSV) file

╭─ Arguments ─────────────────────────────────────────────────────────────╮

│ * filename TEXT File to store the CSV [default: None] [required] │

╰─────────────────────────────────────────────────────────────────────────╯

╭─Options─────────────────────────────────────────────────────────────────╮

│ --header --no-header Include headers in the first row of the CSV│

│ [default: no-header] │

│ --help Show this message and exit. │

╰─────────────────────────────────────────────────────────────────────────╯The help strings now appear in the help for the command, arguments, and options.

We’re going to use the csv module from the Python standard library to export the events. Import it at the top of the file.

import csvNext, in the export_events function, open a file using the filename argument.

with open(filename, "w") as f:Use the file handle to create a new DictWriter, found in the csv module. The DictWriter also takes the names of the column headers in the fieldnames keyword argument.

with open(filename, "w") as f:

writer = csv.DictWriter(f, fieldnames=["title", "timestamp", "private"])If the header option is True, write the header row.

with open(filename, "w") as f:

writer = csv.DictWriter(f, fieldnames=["title", "timestamp", "private"])

if header:

writer.writeheader()Get a SQLModel session and select all of the Events in the database.

with open(filename, "w") as f:

writer = csv.DictWriter(f, fieldnames=["title", "timestamp", "private"])

if header:

writer.writeheader()

with Session(engine) as session:

events = session.exec(

select(Event).order_by(Event.timestamp.asc())

).all()Iterate over the events. For each one, create a dictionary with the title, timestamp and private columns. The timestamp will need to be formatted in a string, and the boolean will be stored as an integer. The dictionary is the value to the writerow method.

with open(filename, "w") as f:

writer = csv.DictWriter(f, fieldnames=["title", "timestamp", "private"])

if header:

writer.writeheader()

with Session(engine) as session:

events = session.exec(

select(Event).order_by(Event.timestamp.asc())

).all()

for event in events:

writer.writerow(

{

"title": event.title,

"timestamp": event.timestamp.strftime("%Y-%m-%s"),

"private": 1 if event.private else 0

}

)The file is now written and we can display a confirmation to the user. It would be nice to show how many rows were exported. Using the count function in the SQLAlchemy func module, we can get that number. And then use it in the message.

from sqlalchemy import func

...

with open(filename, "w") as f:

writer = csv.DictWriter(f, fieldnames=["title", "timestamp", "private"])

if header:

writer.writeheader()

with Session(engine) as session:

events = session.exec(

select(Event).order_by(Event.timestamp.asc())

).all()

for event in events:

writer.writerow(

{

"title": event.title,

"timestamp": event.timestamp.strftime("%Y-%m-%s"),

"private": 1 if event.private else 0

}

)

event_count = session.exec(

select(func.count(Event.id))

).one()

console.print(f"Exported {event_count} row(s)", style=app_styles.success)Try it out! Suppose we have these events in the database.

| Title | Timestamp | Private |

| Past Event | January 1, 2025 | False |

| New Event | February 2, 2025 | False |

| Private Event | March 3, 2025 | True |

Using the export-events command, export the events to a file named events.csv with a header.

$ python main.py export-events --header

Exported 3 row(s)In the same directory as main.py, open the new events.csv file.

title,timestamp,private

Past Event,2025-01-01,0

New Event,2025-02-02,0

Private Event,2025-03-03,1

Importing a CSV

The import command will use the csv module as well. But instead of the DictWriter, we will use the DictReader that reads a CSV file and returns it in a dictionary-like structure.

Create the import_events function. Decorate it with the command decorator. And add the filename argument and header option similar to the export_events function.

@app.command()

def import_events(

filename: Annotated[str, typer.Argument()],

header: Annotated[bool, typer.Option()] = True

):Open the filename for reader in a with block. Use the file handle to create a new DictReader from the csv module.

with open(filename, "r") as f:

reader = csv.DictReader(f)If the header option is True, set the fieldnames on the reader. If there are no fieldnames, the reader will process every row, otherwise it will assume column headers are in the first row.

with open(filename, "r") as f:

reader = csv.DictReader(f)

if header:

reader.fieldnames = ["title", "timestamp", "private"]Next, iterate over the reader. Each row will be returned as a dictionary. Create an Event for each row. The timestamp will need to be parsed into a Python datetime.date and private will need to be turned into a boolean.

with open(filename, "r") as f:

reader = csv.DictReader(f)

if header:

reader.fieldnames = ["title", "timestamp", "private"]

events = [

Event(

title=event["title"],

timestamp=datetime.datetime.strptime(

event["timestamp"], "%Y-%m-%d"

).date(),

private=False if int(event["private"]) == 0 else True

)

for event in reader

]Create a new SQLModel session and add the events list with the add_all method. Then commit the changes. And show the user a confirmation with the number of events imported.

with Session(engine) as session:

session.add_all(events)

session.commit()

console.print(f"Imported {len(events)} event(s)", style=app_styles.success)Let’s try it out. First, delete the events.db file. Then run the import-events command.

$ python main.py import-events events.csv --header

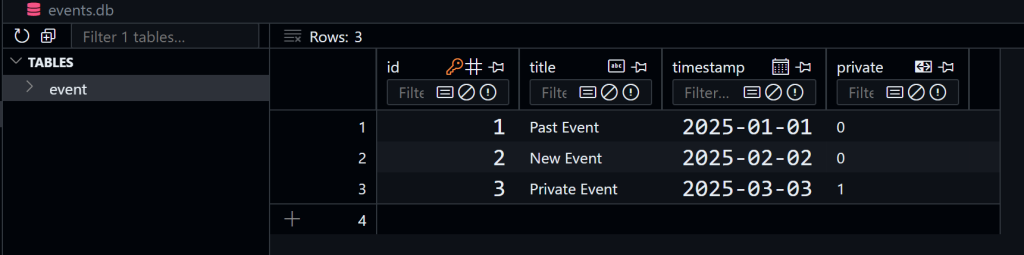

Imported 3 eventsThe events.db file will be created. Look inside to see the data. Again, if you are using Visual Studio Code you can use the SQLite Explorer extension.

Summary

In this post you saw how to use the csv module in the Python standard library, along with SQLModel, to import and export data to and from comma separated value (CSV) files. You also saw how to add help strings to options, arguments and commands in a Typer CLI.