In the previous post, you saw how to implement a complete REST API. To keep things simple, all of the data was stored in a Python dictionary. This is a less than ideal solution for a number of reasons. A real world application would use a database. FastAPI works with a number of different databases and can accommodate a flexible array of development scenarios. In this post, we will use a combination of SQLite, SQLAlchemy, and Alembic, in cooperation with the Pydantic schemas from the previous post to refactor the application to use a database to store the todo list.

Application Structure

As this application is more complex than the previous, we should structure it so that it is easier to maintain and understand. There will be separate modules for the Pydantic schemas, the SQLAlchemy models, a repository that handles the interaction with the database, utilities to manage the database, and the FastAPI instance itself. All of these will be inside of a parent module containing the FastAPI application.

I’ll put the parent module in a new folder called app. Inside of app, I’ll add a blank __init__.py file to mark the folder as a module.

Database Utilities

Before going further, now is a good time to install the required packages. (The pydantic package is installed as a dependency of fastapi.)

$ pip install fastapi sqlalchemy alembic uvicornNext, create a new file, inside of app, named db.py. This will hold the database utilities. Here is where we will configure SQLAlchemy to connect to a SQLite database. The following code imports several functions from the sqlalchemy module to connect to the database.

from sqlalchemy import create_engine

from sqlalchemy.orm import DeclarativeBase, sessionmakerThe create_engine function will return a SQLAlchemy Engine which is used by the sessionmaker function to create a session factory. The sessions will be used in the application to query the database.

DATABASE_URL = "sqlite:///./todo.db"

engine = create_engine(DATABASE_URL, connect_args={"check_same_thread": False})

Session = sessionmaker(autocommit=False, autoflush=False, bind=engine)As SQLite is a file-based database, the connection string is just a file path. The three slashes after the protocol name mean that this is a relative path. So relative to the current path SQLAlchemy will look for a file named blog.db. I won’t go into great detail about the check_same_thread argument. It’s something that is specific to the combination of SQLite and FastAPI. However, it is intended to prevent conflicts when accessing the database from different threads.

The sessionmaker function binds to the engine to create sessions to interact with the database. The autocommit and autoflush keyword arguments give you absolute control when persisting data to the database. In web applications, it is best to keep control so that you ensure database operations are completed in the context of a request. Thus these values are set to False.

You’ll also need a base class from which to derive the SQLAlchemy models. For this demo, you can just derive a class from DeclarativeBase. For more complex applications you might include configuration and other setup.

class Base(DeclarativeBase):

passPydantic Schemas

We’ll come back to the db module later. Let’s work on the models and the schemas. The schemas you saw in the previous post. These are classes, derived from the Pydantic BaseModel, that represent the structure of the data inside of the FastAPI application, as opposed to the SQLAlchemy models that represent the structure of the database.

Inside of the app module folder, create a new file schemas.py, and import the BaseModel from the pydantic module.

from pydantic import BaseModelThe implementation of the TodoItem schema starts off the same.

class TodoItem(BaseModel):

id: int

task: str

complete: bool

class Config:

orm_mode = TrueThe Config inner class is used to set addition configuration options for how the schema should behave. In this case, orm_mode makes is easier to convert between the Pydantic schema classes and the SQLAlchemy model classes that will be created in the next section.

But first, go ahead and add the two DTO schemas for creating and updating TodoItems.

from typing import Optional

class CreateTodoItem(BaseModel):

task: str

class UpdateTodoItem(BaseModel):

task: Optional[str] = None

complete: Optional[bool] = NoneAs these are unchanged from the previous post I won’t discuss them further. And that’s all for the Pydantic schema classes!

SQLAlchemy Models

There is only one model class for this application. Put it in new Python file, models.py, in the app module folder.

In SQLAlchemy, a model class derives from the base class generated by the declarative_base factory function earlier from the db module. In the model class, you use types from the sqlalchemy module to define the mappings to columns in a database table. Here are the required imports.

from sqlalchemy import Integer, String, Boolean

from sqlalchemy.orm import Mapped, mapped_column

from .db import BaseIn the model class, a database table column is represented with the Mapped class which use Python type hints. These are generated with the mapped_column function.

class TodoItem(Base):

__tablename__ = "todo_items"

id: Mapped[int] = mapped_column(Integer, primary_key=True)

task: Mapped[str] = mapped_column(String)

complete: Mapped[bool] = mapped_column(Boolean, default=False)Keyword arguments are used to mark the id column as the primary key and False as the default value for the complete column. Also, notice the __tablename__ field. This is used to override the name of the table that maps to this class. If you omit it, SQLAlchemy will generate a name based on the name of the model class.

And that’s it for the SQLAlchemy model classes!

Model Repository

The repository module contains functions that communicate with the database and handle converting between Pydantic schema classes and SQLAlchemy model classes. Add a new Python file, repository.py in the app module folder.

All database interaction is done via a SQLAlchemy Session. And you’ll need the models and schemas too.

from sqlalchemy import select

from sqlalchemy.orm import Session

from . import models, schemasThe first function will use a Session to retrieve all TodoItems from the database.

def get_todo_items(sess: Session):

stmt = select(models.TodoItem)

return sess.execute(stmt).scalars().all()The next function gets a TodoItem by its id. It actually gets the first TodoItem with the id. But since the id is the primary key it is unique and the first one is the only one.

def get_todo_item_by_id(sess: Session, todo_id: int):

stmt = select(

models.TodoItem

).filter(

models.TodoItem.id == todo_id

)

return sess.execute(stmt).scalar_one_or_none()The create_todo_item function also accepts a CreateTodoItem schema. It uses the task to create a new TodoItem model object, then adds it to the Session and commits the change to the database. Before returning the new TodoItem the Session refreshes it to make sure the id value is included in the model.

def create_todo_item(

sess: Session,

create_todo: schemas.CreateTodoItem

):

todo_item = models.TodoItem(task=create_todo.task)

sess.add(todo_item)

sess.commit()

sess.refresh(todo_item)

return todo_itemThe update_todo_item function doesn’t need much explanation. First, use the get_todo_item_by_id function to get the TodoItem. Then use the UpdateTodoItem schema to update the values in the TodoItem. Then commit and refresh as in the create_todo_item function.

from fastapi import HTTPException

def update_todo_item(

sess: Session,

todo_id: int,

update_todo: schemas.UpdateTodoItem

):

todo_item = get_todo_item_by_id(sess, todo_id)

if todo_item is not None:

if update_todo.task is not None:

todo_item.task = update_todo.task

if update_todo.complete is not None:

todo_item.complete = update_todo.complete

sess.commit()

sess.refresh(todo_item)

return todo_item

raise HTTPException(status_code=404, detail="Todo item not found")Deleting an item is simple. Again, use the get_todo_item_by_id function to get the TodoItem. Call the delete method on the Session and pass it the TodoItem. Then commit the change. There is nothing to be refreshed as the TodoItem was not modified.

def delete_todo_item(sess: Session, todo_id: int):

todo_item = get_todo_item_by_id(sess, todo_id)

if todo_item is not None:

sess.delete(todo_item)

sess.commit()

return todo_item

raise HTTPException(status_code=404, detail="Todo item not found")With the repository module done we can move on to the FastAPI application in the main module. But first, we need to finish up the db module.

Dependency Injection

Warning: this might get a little abstract because there is a lot of hand waving going with between Python and FastAPI. But you don’t need to worry about it. I’ll just go over the concepts.

Again, all interaction with a database happens via a SQLAlchemy Session. The sessionmaker factory function was used in the db module to create a session factory. But those sessions have to be managed. This is where dependency injection in FastAPI and generators in Python come into play.

In the db module, add a function to create and manage a SQLAlchemy Session.

def get_db():

db = Session()

try:

yield db

finally:

db.close()On line 2, the Session factory creates a new SQLAlchemy Session. By convention this is called db. Technically it is a connection to the database. Inside of the try block on line 4 the Session is yielded meaning it is returned to the caller but the function does not return. As the yield is inside of a try block, when the yielded Session goes out of scope (ie. the request using it is done) control will return to the get_db function. As there is no more code in the try block, the finally block will close the Session.

TLDR: you don’t have to worry about remembering to manually close the Session!

It gets better. You don’t even have to call the get_db function in the FastAPI handler function. Instead you use the dependency injection mechanism in FastAPI. When provided with a generator function (such as get_db), FastAPI will make the yielded value available inside of the handler function. Let’s see how this works.

FastAPI Application

First we need a number of imports. The FastAPI application and the HTTPException need to be imported from the fastapi module. Also, from the fastapi module, comes the Depends function. In a minute you’ll see how this implements dependency injection with the get_db function that is imported from the db module. And for type hints, bring in the Session class from SQLAlchemy. To do the work of the database, we need the models, schemas and repository modules.

And don’t forget to create a FastAPI application!

from fastapi import FastAPI, Depends, HTTPException

from sqlalchemy.orm import Session

from . import models, schemas, repository

from .db import get_db

app = FastAPI()The first endpoint will get all TodoItems from the database.

from typing import List

@app.get("/todos", response_model=List[schemas.TodoItem])

def get_todo_items(db: Session = Depends(get_db)):

return repository.get_todo_items(db)Here we see the first use of the Depends function. The get_todo_items repository function needs a SQLAlchemy Session. The handler function accepts one. But we don’t call that function explicitly, FastAPI does in response to an HTTP request. Therefore, the Depends function calls a factory function and passes the yielded value to the handler function. In other words, Depends calls get_db and passes the yielded Session to the get_todo_item handler function as the db parameter. Inside of the handler function, the get_todo_items repository function uses the Session to query the database. When the get_todo_items repository function returns, the finally block in the get_db function will close the Session acquired via the Depends DI function.

TLDR: you don’t have to worry about remembering to manually close the database Session!

Looking at the rest of the handler functions, you’ll notice they follow the same pattern. Get a database Session via dependency injection, use it to call a repository function, return the results to the caller or raise an exception. Here is the handler function to get a TodoItem by id.

@app.get("/todos/{todo_id}", response_model=schemas.TodoItem)

def get_todo_item_by_id(todo_id: int, db: Session = Depends(get_db)):

todo_item = repository.get_todo_item_by_id(db, todo_id)

if todo_item is None:

raise HTTPException(status_code=404, detail="TodoItem not found")

return todo_itemAnd the remaining function handlers should be self explanatory given the context of the previous post.

@app.post("/todos", response_model=schemas.TodoItem)

def create_todo_item(

create_todo: schemas.CreateTodoItem,

db: Session = Depends(get_db)

):

return repository.create_todo_item(db, create_todo)

@app.put("/todos/{todo_id}", response_model=schemas.TodoItem)

def update_todo_item(

todo_id: int,

update_todo: schemas.UpdateTodoItem,

db: Session = Depends(get_db)

):

updated_todo_item = repository.update_todo_item(

db,

todo_id, update_todo

)

if updated_todo_item is None:

raise HTTPException(status_code=404, detail="TodoItem not found")

return updated_todo_item

@app.delete("/todos/{todo_id}", status_code=204)

def delete_todo_item(todo_id: int, db: Session = Depends(get_db)):

deleted_todo_item = repository.delete_todo_item(todo_id, db)

if deleted_todo_item is None:

raise HTTPException(status_code=404, detail="TodoItem not found")

returnThe application is ready to run … except for one thing.

Database Migrations with Alembic

There is no database! Let’s fix that.

It is possible to create the database in code. Given an instance of a SQLAlchemy Engine you could do this in the main module.

Base.metadata.create_all(bind=engine)And it will generate a database with a table for each model class deriving from Base. But when you start making changes to the data model, this method can cause issues. A better choice is to use migrations. Alembic is a tool that will detect changes in the data model of your application and generate Python scripts to manage those changes. Each change is called a migration. If you find that a migration doesn’t work, you can roll back to a previous one. It might sound like a lot of work and on the part of Alembic it is a lot of work but it’s work you don’t have to do.

Make sure that you installed the alembic package. This will install a command line app that you run to scaffold out some code Alembic needs for configuration. Run the command in the project root (the parent folder of the app module).

$ alembic init alembicThe alembic command will create an alembic.ini file and a folder named alembic. You’ll need to modify two files that were generated by alembic. First in alembic.ini, find the sqlalchemy.url key and set it to the connection string to the SQLite database.

sqlalchemy.url = sqlite:///./blog.dbNext, in env.py, in the alembic folder, import the Base SQLAlchemy model class from the app.db module, and the models module itself.

from app.db import Base

from app import modelsFinally, in env.py, find the target_metadata variable and set it to Base.metadata.

target_metadata = Base.metadataSave everything and go back to the command line to create your first migration.

$ alembic revision --autogenerate -m "Initial migration"This will create a new migration with the description “Initial migration”. In the alembic folder, in the versions folder, a new Python script has been generated to add the todo_items table to the database which will map to the TodoItem SQLAlchemy model.

To apply the migration run the upgrade command.

$ alembic upgrade headNotice in the project root (alongside the app and alembic folders) is a new blog.db SQLite database.

Take It For a Spin

Now we’re ready to run the application. From the project root, start the application with uvicorn.

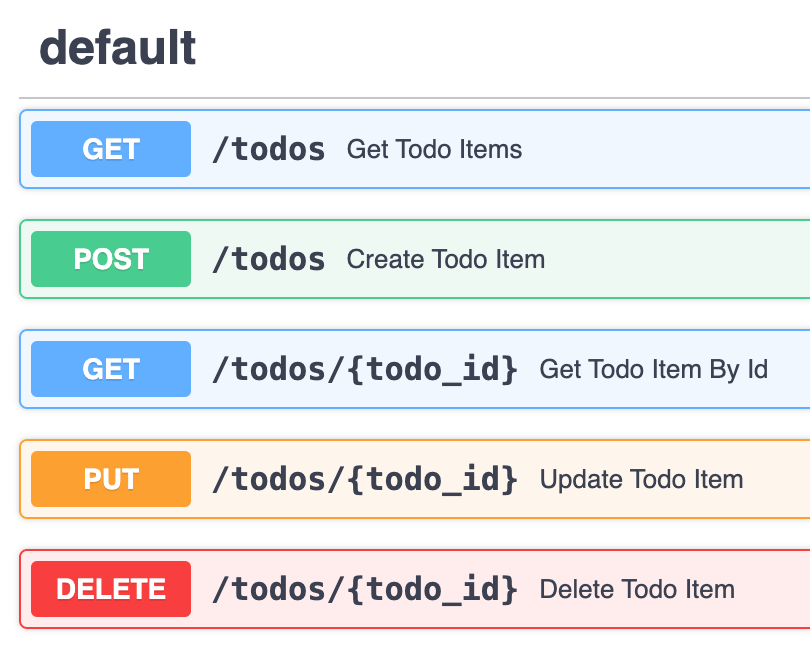

$ uvicorn app.main:app --reloadNavigate to http://127.0.0.1/docs in the browser. You should see five endpoints.

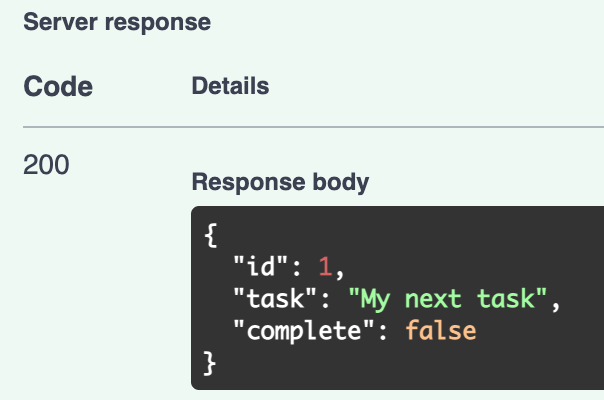

Expand the POST endpoint. Click the Try it out button and provide a task in the JSON payload. Click the Execute button and scroll down to see the JSON for the new TodoItem in the database.

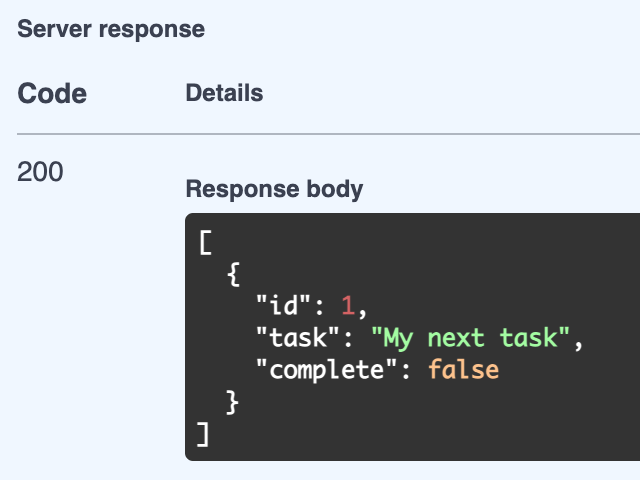

Expand the GET endpoint to get all TodoItems and execute it. The TodoItem you just created is returned.

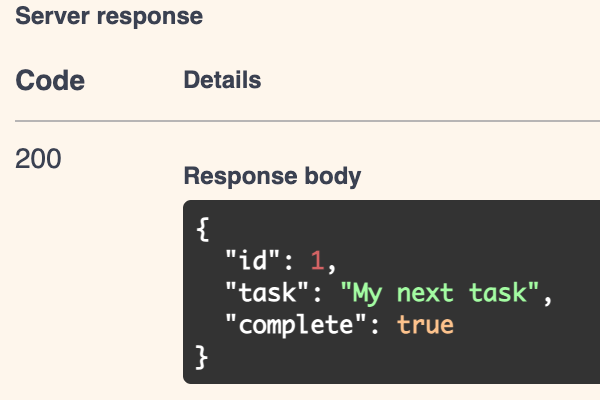

Now expand the PUT endpoint. Enter 1 for the todo_id. In the request JSON, remove the task key and set the complete key to true. Execute the request and notice in the response JSON that complete is now true.

Go back to the GET endpoints to get all TodoItems and notice it has been updated in the database.

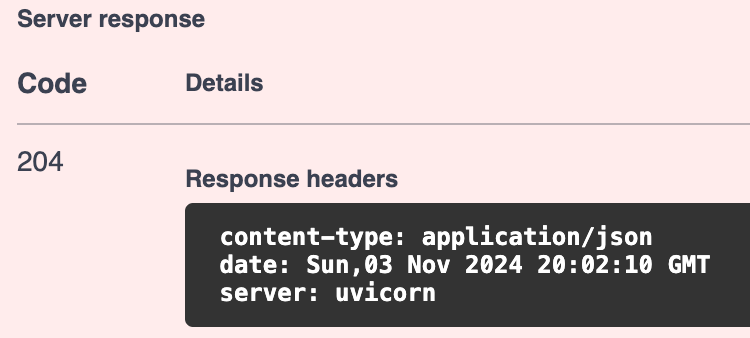

Expand the DELETE endpoint. Enter 1 in the todo_id input box, and execute it. The response is a 204 status code with no body content.

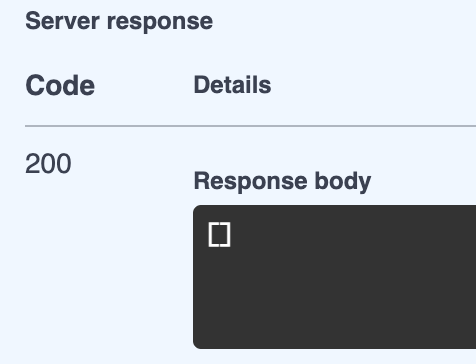

For a sanity check, use the GET endpoint to get all TodoItems and the result should be an empty JSON array as the only item in the database was deleted.

Summary

in this post you saw how to use SQLAlchemy to integrate FastAPI with a SQLite database. You saw how to create SQLAlchemy model classes and map them to Pydantic schema classes. To manage SQLAlchemy Sessions, you used FastAPI’s dependency injection. And you used Alembic to manage changes to the data model and sync them to the database.